NEW [March 3, 2021] We've updated the web-based data visualization links: [Before Merging (raw human-annotated data)] and [After Merging (final data in PartNet)]. Change the PartNet ID to view a different shape.

NEW [Oct 25, 2020] We've released the transformation matrices that align ShapeNet-v1 models to the PartNet space for chairs, tables, storage furniture and beds HERE (4x4 transformation matrices, stored in row-major order). For other categories, please run THIS CODE yourself. The alignment may fail in rare cases, but such failures can be detected. Check the code comments for more information. Hope this is helpful!
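For readers unsure what a row-first (row-major) 4x4 matrix implies in practice, here is a minimal sketch of applying one to mesh vertices. It is not the released code: the function names `reshape_row_major` and `transform_points` are hypothetical, the 16 values are assumed to be already loaded as a flat list, and the matrix is assumed to act on column vectors `[x, y, z, 1]` with a last row of `[0, 0, 0, 1]`. Please check the released files and code comments for the actual conventions.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def reshape_row_major(flat: Sequence[float]) -> List[List[float]]:
    """Turn 16 values stored row by row into a 4x4 nested list."""
    if len(flat) != 16:
        raise ValueError(f"expected 16 values, got {len(flat)}")
    return [list(flat[4 * r: 4 * r + 4]) for r in range(4)]


def transform_points(matrix: List[List[float]],
                     points: Sequence[Point]) -> List[Point]:
    """Apply an affine 4x4 matrix to 3D points (column-vector convention).

    Assumption: the last row is [0, 0, 0, 1], so only the first three
    rows are needed and no homogeneous division is performed.
    """
    out: List[Point] = []
    for x, y, z in points:
        h = (x, y, z, 1.0)  # homogeneous coordinates
        tx, ty, tz = (sum(row[c] * h[c] for c in range(4))
                      for row in matrix[:3])
        out.append((tx, ty, tz))
    return out


# Toy matrix (made up for illustration): scale by 2, then shift x by 1.
flat = [2, 0, 0, 1,
        0, 2, 0, 0,
        0, 0, 2, 0,
        0, 0, 0, 1]
aligned = transform_points(reshape_row_major(flat), [(1.0, 1.0, 1.0)])
```

If the transformed model appears mirrored or misplaced, the matrix may instead follow the opposite convention (transposed, or acting on row vectors), which is worth ruling out before suspecting an alignment failure.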

[April 1, 2020] We release the pretrained models for PointCNN part semantic segmentation here (4.5G).

[Oct 5, 2019] The authors of this paper enrich our PartNet dataset with their binary symmetry hierarchies. Please refer to this link to download the data. Please cite both our paper and their paper if you use this data. Disclaimer: This is a separate effort done by Nanjing University and National University of Defense Technology (NUDT). Please directly contact Kevin Xu for questions and instructions. As part of our StructureNet paper, we release n-ary part hierarchies for PartNet models.

[July 11, 2019] We release the pretrained models for the proposed part instance segmentation method here (1.3G).

[April 19, 2019] We release our 3D web-based annotation system here. Please watch this video for reference.

[March 29, 2019] Updated pre-release of PartNet v0 with better organization, visualizations, and separate downloads of HDF5 files for the semantic and instance segmentation tasks. The Github repo and Data download page are updated.

[March 12, 2019] We release PartNet v0 (pre-release version) as a part of the ShapeNet effort. Please register as a ShapeNet user first in order to download. We provide meta-files and scripts on this Github repo. If you have any questions, please post issues on the Github page. If you have general feedback, please fill in this form to let us know. If you observe any data annotation errors, please fill in this errata to help improve PartNet. We will release a stable version v1 later and will host PartNet challenges on v1.

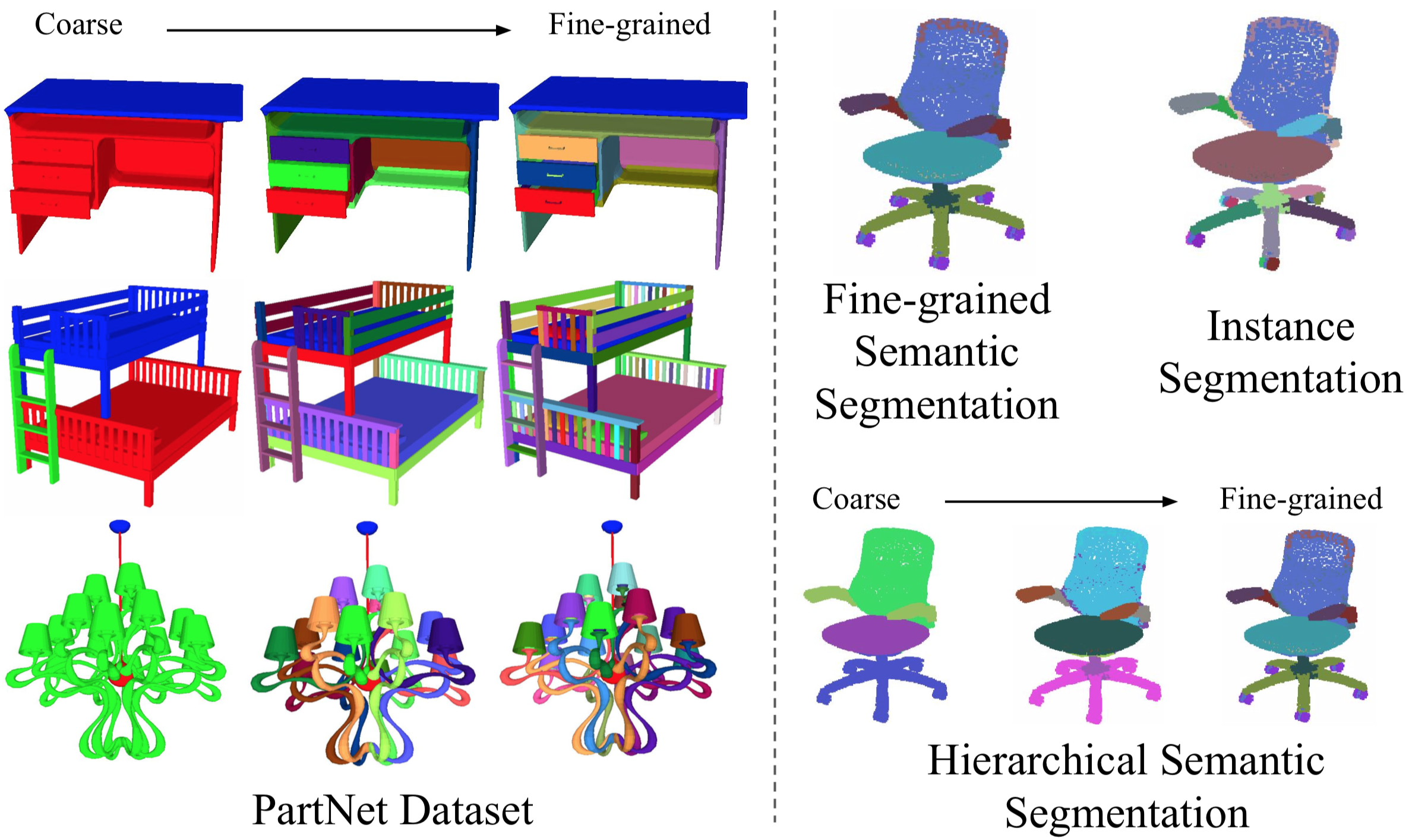

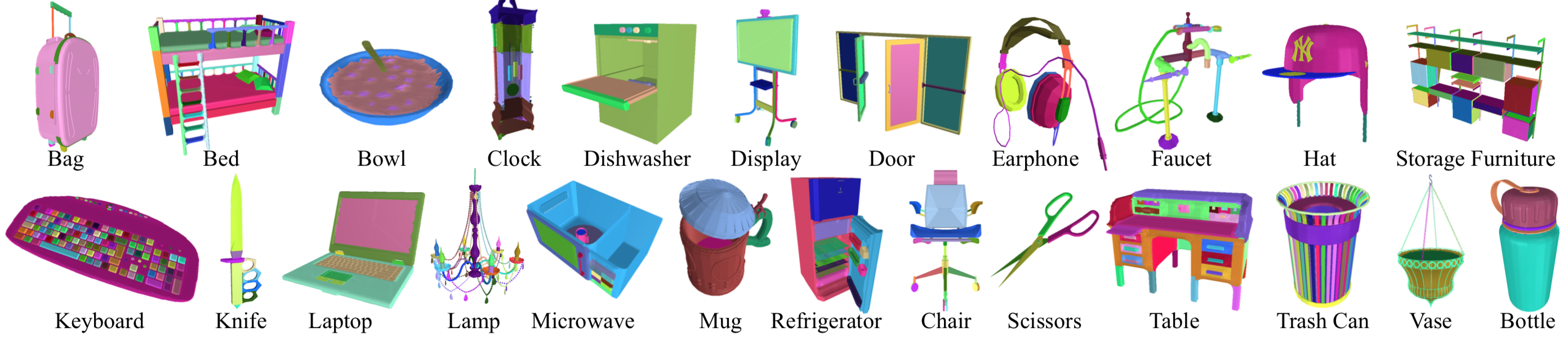

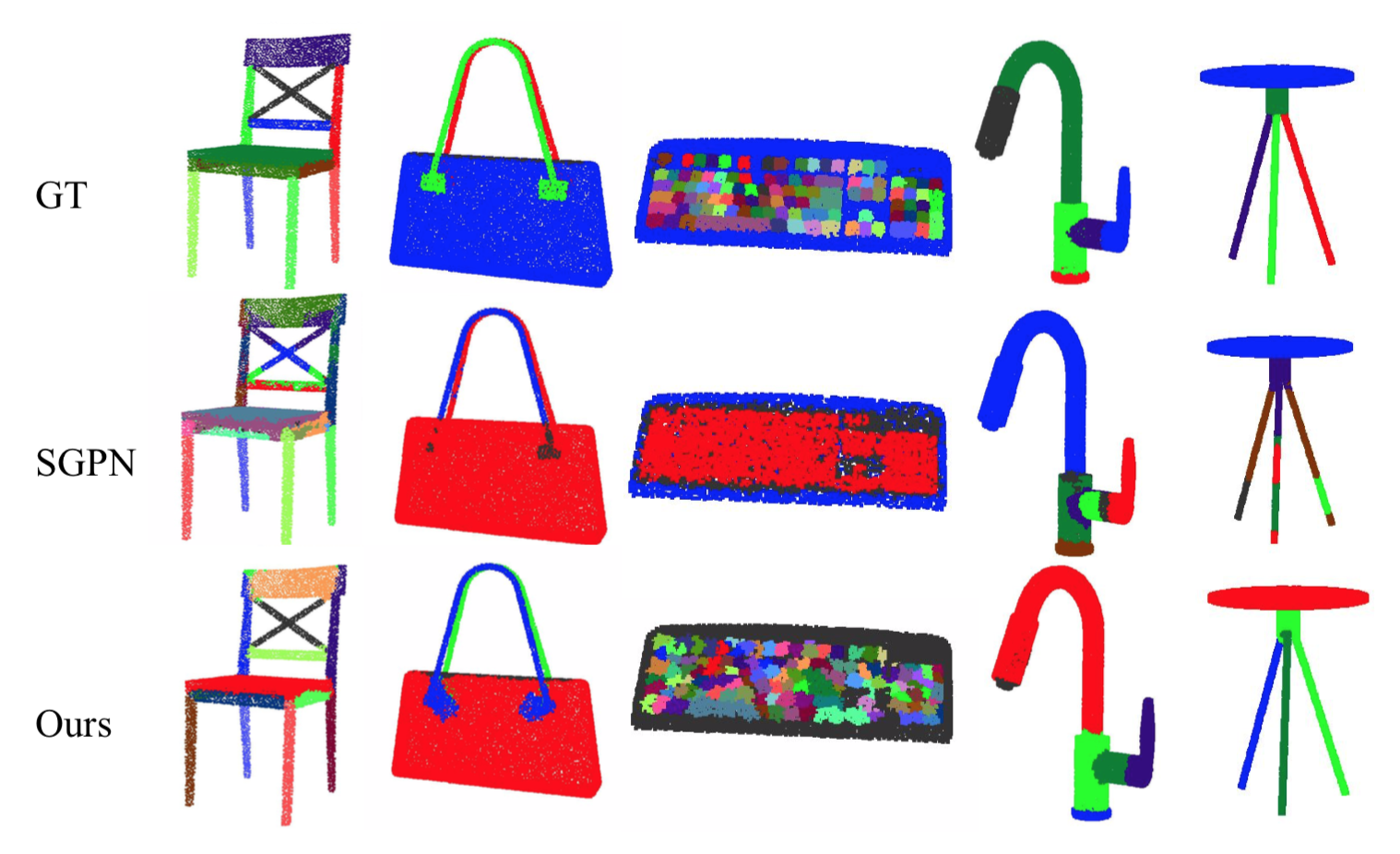

We present PartNet: a consistent, large-scale dataset of 3D objects annotated with fine-grained, instance-level, and hierarchical 3D part information. Our dataset consists of 573,585 part instances over 26,671 3D models covering 24 object categories. This dataset enables and serves as a catalyst for many tasks such as shape analysis, dynamic 3D scene modeling and simulation, affordance analysis, and others. Using our dataset, we establish three benchmarking tasks for evaluating 3D part recognition: fine-grained semantic segmentation, hierarchical semantic segmentation, and instance segmentation. We benchmark four state-of-the-art 3D deep learning algorithms for fine-grained semantic segmentation and three baseline methods for hierarchical semantic segmentation. We also propose a novel method for part instance segmentation and demonstrate its superior performance over existing methods.

Figure 1. PartNet Dataset and Three Benchmarking Tasks. Left: we show example annotations at three levels of segmentation in the hierarchy. Right: we propose three fundamental and challenging segmentation tasks and establish benchmarks using PartNet.

Figure 2. PartNet Fine-grained Instance-level Shape Part Annotation. We visualize example shapes with fine-grained part annotations for the 24 object categories in PartNet.

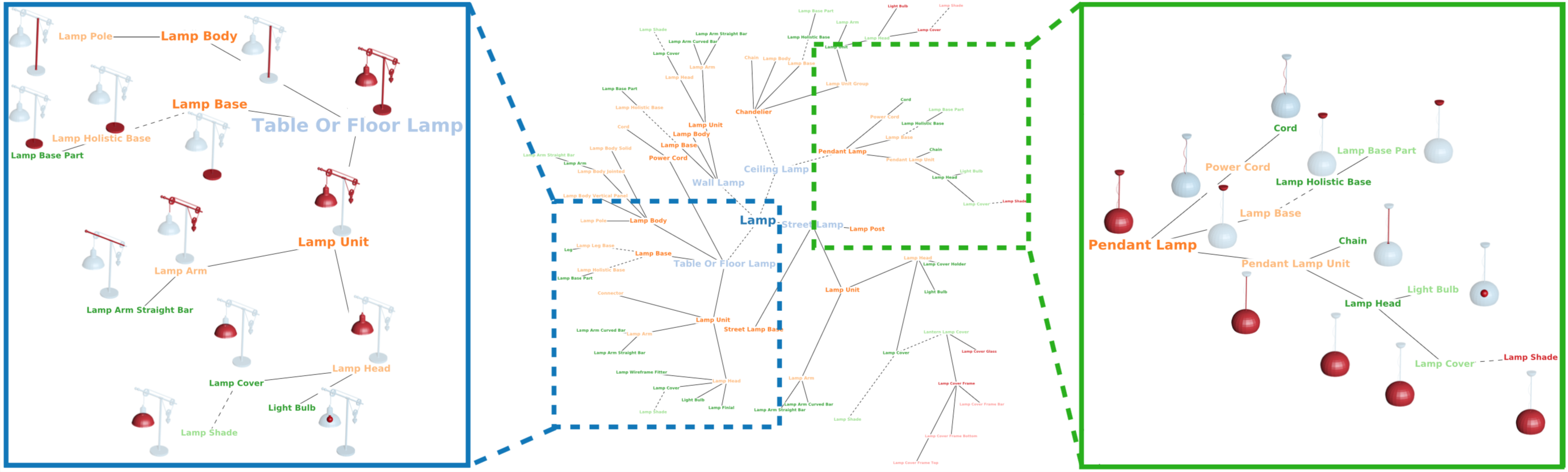

Figure 3. PartNet Hierarchical Shape Part Template and Annotation. We show the expert-defined hierarchical template for lamp (middle) and the instantiations for a table lamp (left) and a ceiling lamp (right). The And-nodes are drawn in solid lines and the Or-nodes in dashed lines. The template is deep and comprehensive enough to cover structurally different types of lamps. At the same time, the same part concepts, such as light bulb and lamp shade, are shared across the different types.

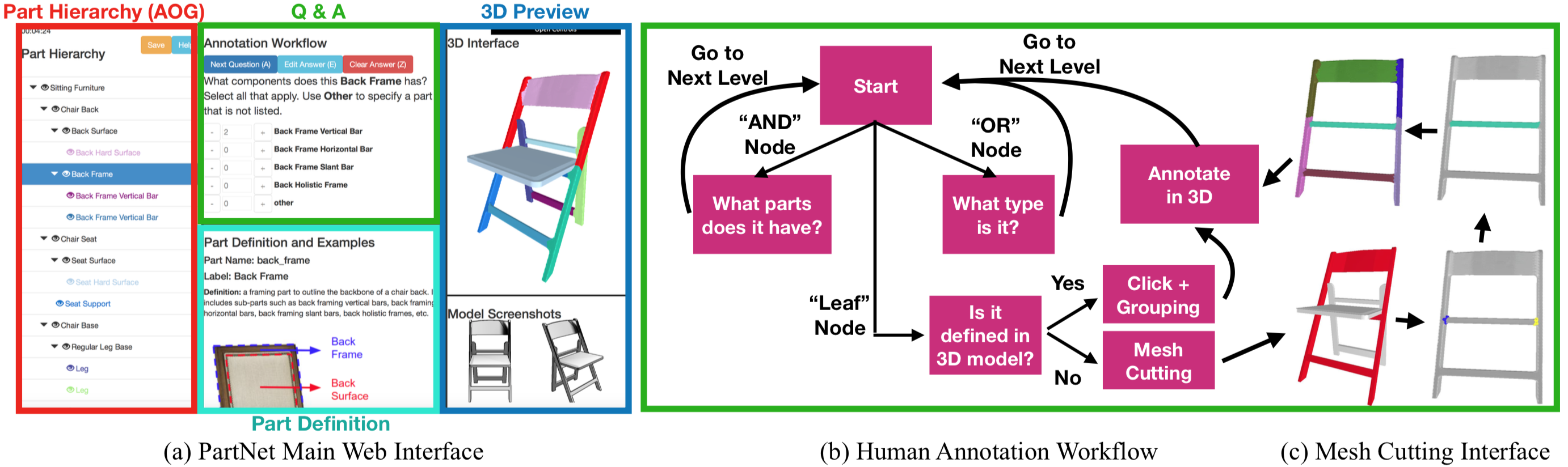

Figure 4. Data Annotation Workflow. We show our annotation interface with its components, the proposed question-answering workflow and the mesh cutting interface.

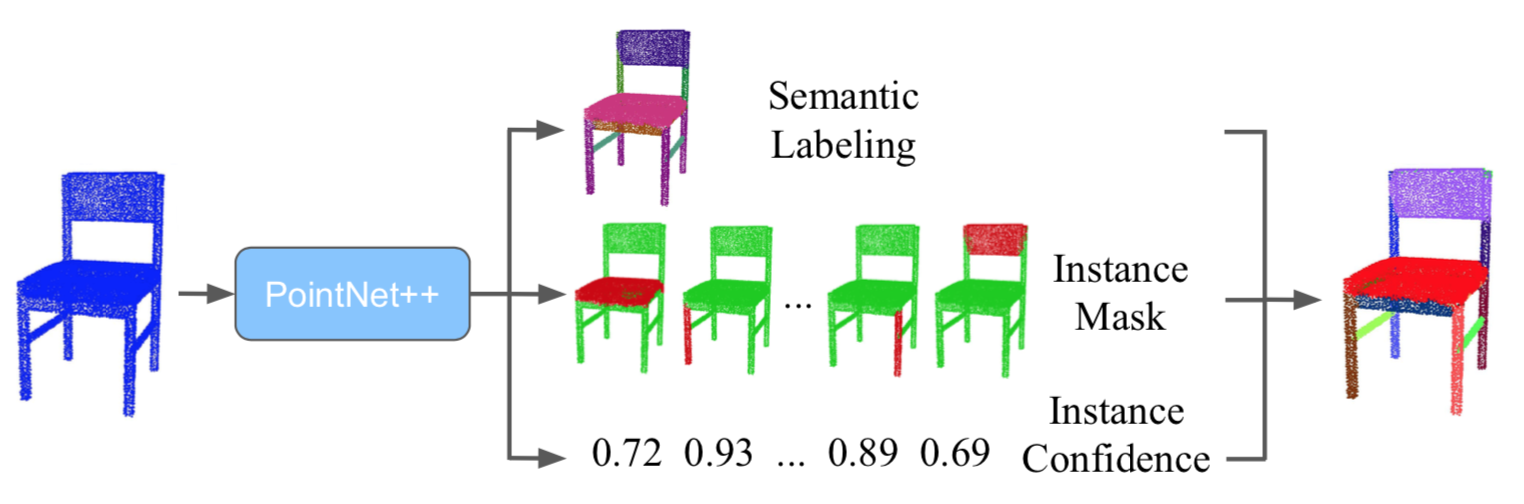

Figure 5. The Proposed Method for Part Instance Segmentation. The network learns to predict three components: the semantic label for each point, a set of disjoint instance masks and their confidence scores for part instances.

Figure 6. Qualitative Comparison To SGPN. We show qualitative comparisons for our proposed method and SGPN. Our method produces more robust and cleaner results than SGPN.

Figure 7. Learned Part Instance Correspondences. Our proposed method learns structural priors within each object category that are more instance-aware and robust in predicting complete instances. We observe that training for a set of disjoint masks across multiple shapes gives us consistent part instances. The corresponding parts are marked with the same color.

This research was supported by NSF grants CRI-1729205, IIS-1763268, and CHS-1528025, a Vannevar Bush Faculty Fellowship, a Google fellowship, and gifts from Autodesk, Google and Intel AI Lab. We especially thank Zhe Hu from Hikvision for help with data annotation and Linfeng Zhao for help with preparing the hierarchical templates. We appreciate the work of the 66 annotators from Hikvision, Ytuuu and Data++ on data annotation. We also thank Ellen Blaine for helping with the narration of the video.